Graph4Rec: distributed multi-machine CPU launch fails with mpirun #518


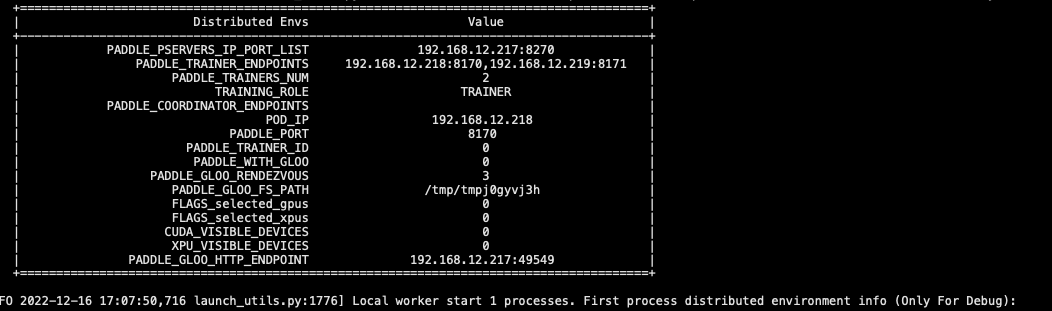

Launching manually works.

1. The ips configuration is as follows:

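In distributed mode, shouldn't the returned loss be an array? Is this still running as a single machine?

Something is likely wrong here. The code calls barrier_worker in many places, so the workers do communicate with each other.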
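To illustrate why a barrier only makes sense when every worker has actually started, here is a minimal sketch. It does not use barrier_worker or any Graph4Rec code: Python's standard `threading.Barrier` stands in for it, and `run_workers`, `num_expected`, and `num_started` are hypothetical names chosen for this example. The barrier releases only when the expected number of workers arrive, so a job that believes it has two workers but started only one will block (here, time out) at the first barrier.

```python
import threading


def run_workers(num_expected, num_started, timeout=0.5):
    """Start num_started threads that wait on a barrier sized for num_expected.

    Stand-in for a distributed barrier; not the Graph4Rec implementation.
    """
    barrier = threading.Barrier(num_expected)
    results = []
    lock = threading.Lock()

    def worker(rank):
        try:
            # Blocks until num_expected workers have called wait().
            barrier.wait(timeout=timeout)
            status = "passed"
        except threading.BrokenBarrierError:
            # Raised when the timeout expires before everyone arrives.
            status = "timed out"
        with lock:
            results.append((rank, status))

    threads = [threading.Thread(target=worker, args=(r,)) for r in range(num_started)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(results)


if __name__ == "__main__":
    print(run_workers(num_expected=2, num_started=2))  # every worker arrives
    print(run_workers(num_expected=2, num_started=1))  # one worker is missing
```

If a run passes every barrier without hanging, then either all workers joined or the job was configured with a single worker, which would be consistent with the scalar loss mentioned above.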

How do I launch Graph4Rec on multiple CPU machines with mpirun?
