
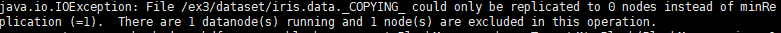

While doing this experiment today, I ran into a problem. The exact error was:

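The solution is as follows:

(1) Use the `jps` and `hdfs dfsadmin -report` commands to check that the processes and all nodes are running normally.

(2) Check the HDFS metadata directories and make sure that `clusterID` and the related fields in the VERSION files on the NameNode and DataNode are consistent.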

(3) If both checks pass, edit the configuration file `hdfs-site.xml` and add the following configuration:

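If the upload still fails after all of this, you will see the following error:

This means that port 50010 on the DataNode is not open. Note that it is the DataNode, not the NameNode, so port 50010 has to be added to the DataNode's security group, as shown in the figure:

After that, uploading the file again succeeded.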
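To confirm the diagnosis about the DataNode port from the machine doing the upload, here is a minimal Python sketch (an illustration, not part of the original fix) that simply attempts a TCP connection to the DataNode's data-transfer port. The function name `port_is_open` is hypothetical. 50010 is the default `dfs.datanode.address` port in Hadoop 2.x; Hadoop 3.x defaults to 9866, so pass whichever port your cluster actually uses.

```python
import socket


def port_is_open(host: str, port: int = 50010, timeout: float = 3.0) -> bool:
    """Return True if a TCP handshake with host:port completes within `timeout` seconds."""
    try:
        # create_connection resolves `host` and opens a TCP connection;
        # the socket is closed again as soon as the with-block exits.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts and DNS failures all derive from OSError.
        return False


# Hypothetical usage from the upload client (replace the host with your DataNode's address):
# print(port_is_open("datanode.example.com", 50010))
```

If this returns `False` from the client while `jps` on the DataNode host still lists a running `DataNode`, the cause is more likely a firewall or security-group rule than a stopped daemon.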


Hi, about point (1): what kind of output counts as normal? And about point (2): how do you view the HDFS metadata?
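A rough, hedged answer to both questions, sketched in Python rather than taken from the original post: `jps` prints one `<pid> <MainClass>` line per running JVM, so a healthy master should list `NameNode` and each worker should list `DataNode` (and `hdfs dfsadmin -report` should show every DataNode as live). Each storage directory contains a `current/VERSION` file, under the paths configured by `dfs.namenode.name.dir` and `dfs.datanode.data.dir`, and the `clusterID=` lines in those files must match. The helper names below are hypothetical.

```python
from __future__ import annotations

from pathlib import Path


def running_daemons(jps_output: str) -> set[str]:
    """Parse `jps` output ("<pid> <MainClass>" per line) into a set of class names."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2:
            names.add(parts[1])
    return names


def missing_daemons(jps_output: str, expected: set[str]) -> set[str]:
    """Return the expected daemons (e.g. {"NameNode"}) that jps did not list."""
    return expected - running_daemons(jps_output)


def read_version(path) -> dict[str, str]:
    """Read a VERSION file: Java-properties style key=value lines, '#' comments."""
    props = {}
    for line in Path(path).read_text().splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def cluster_ids_match(namenode_version, datanode_version) -> bool:
    """True only if both VERSION files define clusterID and the values are equal."""
    nn = read_version(namenode_version).get("clusterID")
    dn = read_version(datanode_version).get("clusterID")
    return nn is not None and nn == dn
```

Typical use would be to feed the text of `jps` into `missing_daemons`, and to copy the DataNode's `current/VERSION` next to the NameNode's before calling `cluster_ids_match` on the two paths.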
