Server performance with multiple camera sensors #3836

Comments

|

Another thing you might have to look out for is I/O bottlenecks when saving a lot of data to disk. We tried running several captures on a local non-SSD (i.e. magnetic) drive and on a remote NFS share, and ran into bottlenecks in both cases. |

I'm not saving the image data to the disk, but streaming it. |

|

Hello! We noticed similar behaviour when using multiple RGB cameras (3). The server frame rate drops to 5 FPS; every camera "costs" 15 frames/sec. :( When additional traffic actors are spawned, the situation gets even worse. We use an RTX 3070, which is utilized at only 25%. (edit: same on an RTX 2060; usage is a little higher, but much remains unused...) CARLA leaves much of the resources unused... Any ideas? Regards, |

|

@bernatx could you have a look at this please? |

|

Hello! I am also experiencing a similar problem with LiDAR sensors. I am using an RTX 5000 GPU. Scenario and settings: Server FPS (appx): GPU Utilization (appx): Carla is not utilizing the resources completely. Any ideas? Regards, |

|

Hi! |

|

We found that CARLA performs better on Ubuntu 20.04, but the main problem still exists... |

|

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions. |

|

Hi, we're experiencing similar issues and found out, using Wireshark, that at high data bandwidth the Boost TCP communication is the bottleneck, especially when running in sync mode. The larger the data, the more packets need to be sent; we think that shared memory communication could help tackle this issue. PS: Our use case involves a modified RGB camera sending 4K float images to be displayed on a Dolby Vision TV, so we're really sending tons of packets. |
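
For a rough sense of scale, here is a back-of-the-envelope sketch of the per-frame payload for such a stream. The comment above does not give the exact image format, so the 3840x2160 resolution, 4 channels, 32-bit floats, 1460-byte TCP payload per segment, and 1 Gbit/s link are all assumptions for illustration only.

```python
import math

def bytes_per_frame(width, height, channels, bytes_per_channel):
    """Raw, uncompressed size of one image in bytes."""
    return width * height * channels * bytes_per_channel

# Assumed values (not stated in the thread): 4K UHD, 4 float32 channels.
frame_bytes = bytes_per_frame(3840, 2160, 4, 4)

# Assumed typical TCP payload per segment on Ethernet (1500 MTU minus headers).
ASSUMED_SEGMENT_PAYLOAD = 1460
segments_per_frame = math.ceil(frame_bytes / ASSUMED_SEGMENT_PAYLOAD)

# Assumed link speed of 1 Gbit/s, ignoring protocol overhead.
ASSUMED_LINK_BYTES_PER_SEC = 1_000_000_000 / 8
seconds_per_frame = frame_bytes / ASSUMED_LINK_BYTES_PER_SEC

print(f"{frame_bytes / 1e6:.1f} MB per frame")
print(f"{segments_per_frame} TCP segments per frame")
print(f"{seconds_per_frame:.2f} s per frame on the assumed link")
```

Under these assumptions a single frame is well over 100 MB, which would be consistent with the transport, rather than the GPU, limiting throughput.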

|

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions. |

|

Hi, we are also experiencing a similar problem. Is there any update? |

|

@dongkeyan CARLA 0.9.14 has been released. It adds a multi-GPU feature, which is very helpful for rendering performance. |

|

@JosenJiang would you be willing to post some statistics on multi-GPU usage? I am planning to purchase some extra GPUs for rendering multiple RGB sensors. What sort of FPS are you getting with different sensor setups (2, 3, 4, 5, 6 cameras)? Thank you. |

|

@JosenJiang That would be very interesting for me, too! |

|

Same question. I wonder whether CARLA can use the GPU at up to 100% utilization to increase performance.

CARLA Version: 0.9.10

OS: Linux Ubuntu 18.04

Hello,

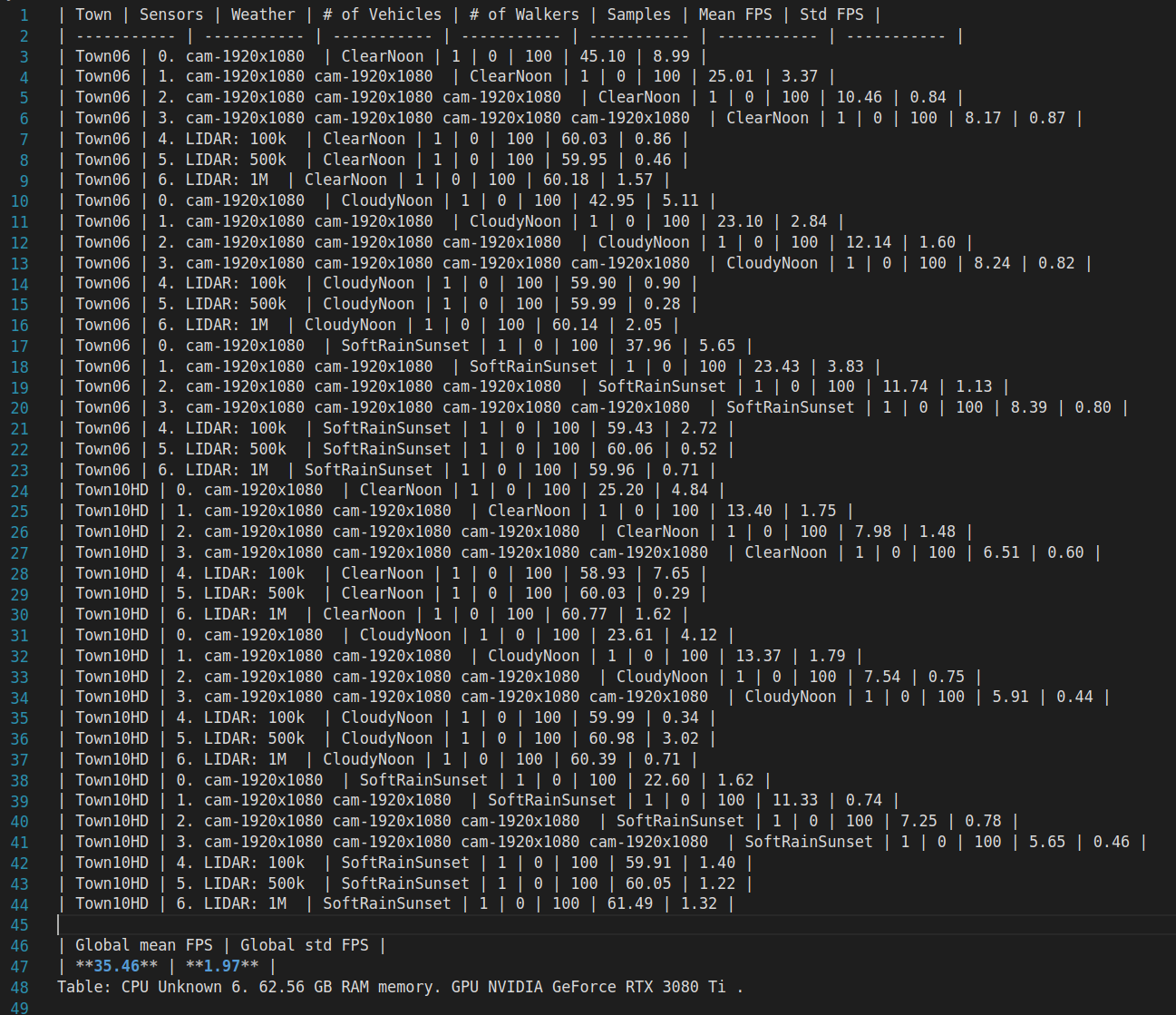

I am interested in using CARLA to create multiple camera sensors and render all their perspectives at a high resolution, for instance 1920x1232 pixels. For this reason, I have run some performance tests to assess the performance of the simulator according to the number of cameras. The client used to connect to the server and create the camera sensors was programmed in C++.

The tests were performed on an i9-9900K CPU @ 3.60 GHz with 32 GB RAM and an RTX 2080 Ti. The simulation was running in Epic mode, since graphics quality is important.

At first, the server was running at 60 FPS with one client but no sensor. It immediately dropped to 30 FPS with the creation of one camera sensor. With two camera sensors, it dropped to 20 FPS, and with three camera sensors, to approximately 15 FPS. When the desired number of 4 camera sensors was included in the simulation, the rate dropped to around 13 FPS.
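
To make the per-camera cost easier to see, the FPS figures above can be converted to frame times. This is only a sketch built on the approximate values reported in this post; the variable and function names are made up for illustration.

```python
# Approximate server FPS reported above, keyed by number of camera sensors.
fps_by_cameras = {0: 60, 1: 30, 2: 20, 3: 15, 4: 13}

def frame_time_ms(fps):
    """Convert frames per second to milliseconds per frame."""
    return 1000.0 / fps

frame_times = {n: frame_time_ms(fps) for n, fps in fps_by_cameras.items()}

# Extra frame time added by the n-th camera relative to n-1 cameras.
added_cost_ms = {
    n: frame_times[n] - frame_times[n - 1]
    for n in sorted(fps_by_cameras)
    if n > 0
}

for n in sorted(fps_by_cameras):
    extra = added_cost_ms.get(n)
    extra_text = "" if extra is None else f" (+{extra:.1f} ms)"
    print(f"{n} cameras: {frame_times[n]:.1f} ms/frame{extra_text}")
```

Read this way, each of the first three cameras adds roughly 16.7 ms per frame, and the fourth adds about 10 ms, although the FPS readings are approximate, so the last figure should be treated with caution.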

I also experimented with reducing the sensor resolution and increasing the number of camera sensors so that the total number of generated pixels stayed the same as for 4 cameras. For instance: 4x1920x1232 = 16x960x616. So, 16 cameras were created with a resolution of 960x616 and the server dropped to 7 FPS. Then, with 64 cameras at 480x308, it dropped to 2 FPS.
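
As a quick check of the pixel budget, the sketch below computes the total pixels per frame and the resulting pixel throughput for the three configurations, using the approximate FPS values reported in this post. The names are illustrative only.

```python
# (camera count, width, height, approximate server FPS) as reported above.
configs = [
    (4, 1920, 1232, 13),
    (16, 960, 616, 7),
    (64, 480, 308, 2),
]

def total_pixels(count, width, height):
    """Pixels rendered per simulation frame across all cameras."""
    return count * width * height

rows = []
for count, width, height, fps in configs:
    pixels = total_pixels(count, width, height)
    # Pixels delivered per second at the reported server frame rate.
    rows.append((count, pixels, pixels * fps))

for count, pixels, throughput in rows:
    print(f"{count:2d} cameras: {pixels} px/frame, "
          f"{throughput / 1e6:.1f} Mpx/s")
```

All three configurations render the same number of pixels per frame, yet the pixel throughput falls as the camera count grows, which points to a per-camera overhead rather than a per-pixel one.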

The FPS measurements were done with a static ego and no other actor in the scenario (Town03). The results can be seen on the graph below:

We observed that the GPU memory usage (%) tends to increase as the number of cameras at high resolution increases. However, drastically increasing the number of camera sensors does not result in higher consumption of GPU resources, despite yielding the worst FPS in these scenarios. In fact, the highest number of cameras (64) with the lowest resolution consumed the least GPU memory and demanded about 70% of the GPU usage. Meanwhile, the four cameras at high resolution consumed only 25% of GPU memory, but demanded about 90% of the GPU usage. These results can be seen in the graphs below:

The average CPU usage (%) remained basically constant, no matter the resolution or the number of cameras. The RAM memory usage (%) varied slightly, achieving the maximum with the four cameras at high resolution.

Having presented these results, I would like to discuss a few things:

I would appreciate your feedback.

Thank you,

Fabio Reway

Maikol Drechsler
