
Gzip is enabled and reported correctly on LH but not via PageSpeed Insights #10610

Comments

Thanks for filing @topherMOE! I'm not able to reproduce this issue on PSI :/ Is it still happening for you?

Hey @patrickhulce, thanks for looking at this issue. Yes, I still see the failed audit. I tried in Incognito mode as well.

Thanks @topherMOE! Can I ask which rough geographic area of the world you're requesting from? That's the only obvious explanation I can see left. I'm requesting from North America, central US.

Otherwise I give up and cede further guesses here to @exterkamp and @jazyan

Hey @patrickhulce. I'm testing this from Bangalore, India.


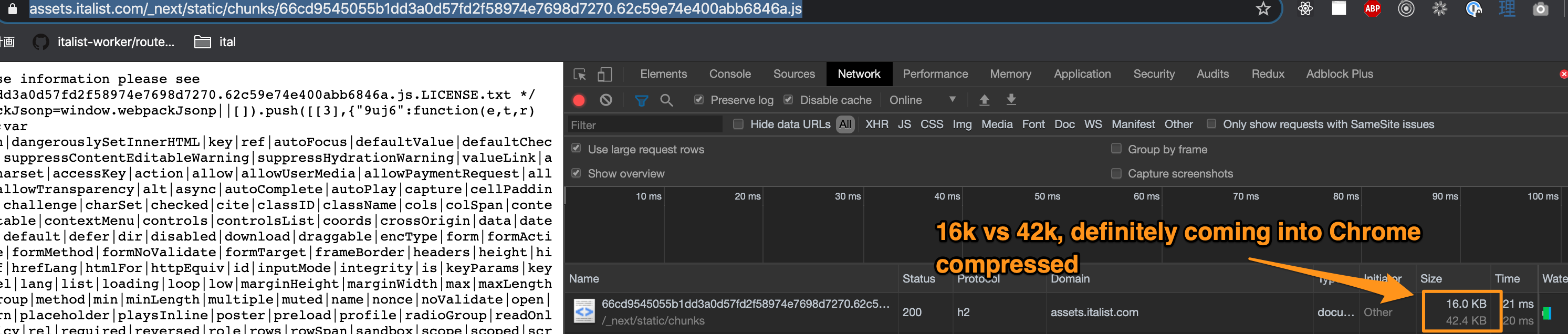

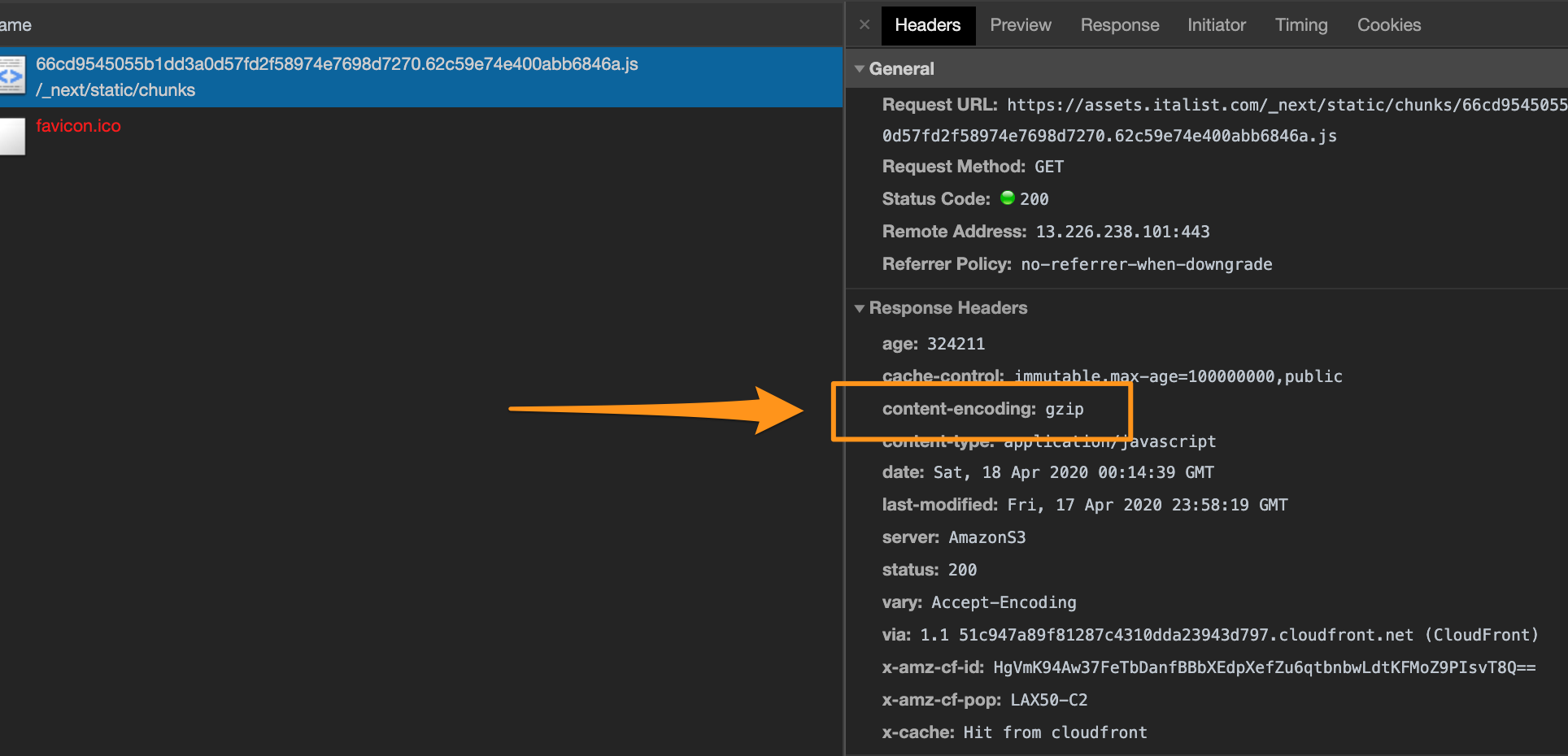

We are experiencing the same thing. In the US we are getting single-digit scores due to a supposed lack of compression. In Japan and Europe we don't see that complaint, and we get a much higher score. This started happening a couple of weeks ago, with no relevant change on our side.

Example URL:

The Chrome DevTools Network tab shows that these static resources are definitely arriving from AWS CloudFront properly gzipped, including the correct response header. Also, if I issue a curl request with the proper Accept-Encoding header, I always get the correct Content-Encoding response header back, with "gzip":

```
$ curl -H "Accept-Encoding: gzip" -I https://assets.italist.com/_next/static/chunks/c0d53ec4.3622723325877400fbf7.js
HTTP/2 200
content-type: application/javascript
date: Sat, 18 Apr 2020 00:14:05 GMT
last-modified: Fri, 17 Apr 2020 23:58:19 GMT
cache-control: immutable,max-age=100000000,public
server: AmazonS3
content-encoding: gzip
vary: Accept-Encoding
x-cache: Hit from cloudfront
via: 1.1 b837267595110a1135bf4fb036d71e1f.cloudfront.net (CloudFront)
x-amz-cf-pop: LAX50-C1
x-amz-cf-id: 6IVRCfFP4Xlpkgkq1zkXcb06XQtCOV0Of1gZqUgnn2OGDa8GXwzCZg==
age: 328731
```

I ran the above repeatedly inside a `watch` command for a while, and it is always gzipped. I have run Google PageSpeed on this site many times over the past few days, and it keeps reporting various scripts as not being gzipped, but which ones it reports as uncompressed is quite random. Sometimes it mentions only 4 scripts, sometimes 6, sometimes 9.
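The `watch` + `curl` loop described above can be written as a small script that tallies which `Content-Encoding` values come back over repeated requests. This is a minimal sketch using only the Python standard library; the helper names `fetch_headers` and `tally_encodings`, the sample count, and the 2-second interval (matching `watch`'s default) are illustrative assumptions, not part of any Lighthouse or PSI tooling.

```python
import time
import urllib.request
from collections import Counter
from typing import Callable, Dict


def fetch_headers(url: str) -> Dict[str, str]:
    """HEAD request advertising gzip support, like `curl -H "Accept-Encoding: gzip" -I`."""
    req = urllib.request.Request(url, method="HEAD", headers={"Accept-Encoding": "gzip"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        # Lower-case header names so later lookups are case-insensitive.
        return {name.lower(): value for name, value in resp.headers.items()}


def tally_encodings(
    url: str,
    samples: int = 20,
    interval: float = 2.0,
    fetch: Callable[[str], Dict[str, str]] = fetch_headers,
) -> Counter:
    """Request `url` `samples` times and count each Content-Encoding seen.

    Responses without a Content-Encoding header are counted as "(none)".
    `fetch` is injectable so the loop can be exercised without network access.
    """
    counts: Counter = Counter()
    for _ in range(samples):
        headers = fetch(url)
        counts[headers.get("content-encoding", "(none)")] += 1
        time.sleep(interval)  # pause between requests, as `watch` does
    return counts
```

For example, `tally_encodings("https://assets.italist.com/_next/static/chunks/c0d53ec4.3622723325877400fbf7.js", samples=5)` should report only `gzip` if the behavior described above holds. Keep in mind that a script run from your own machine only exercises the CloudFront edge location that serves your network, so it cannot reproduce what a PSI server in another region receives.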


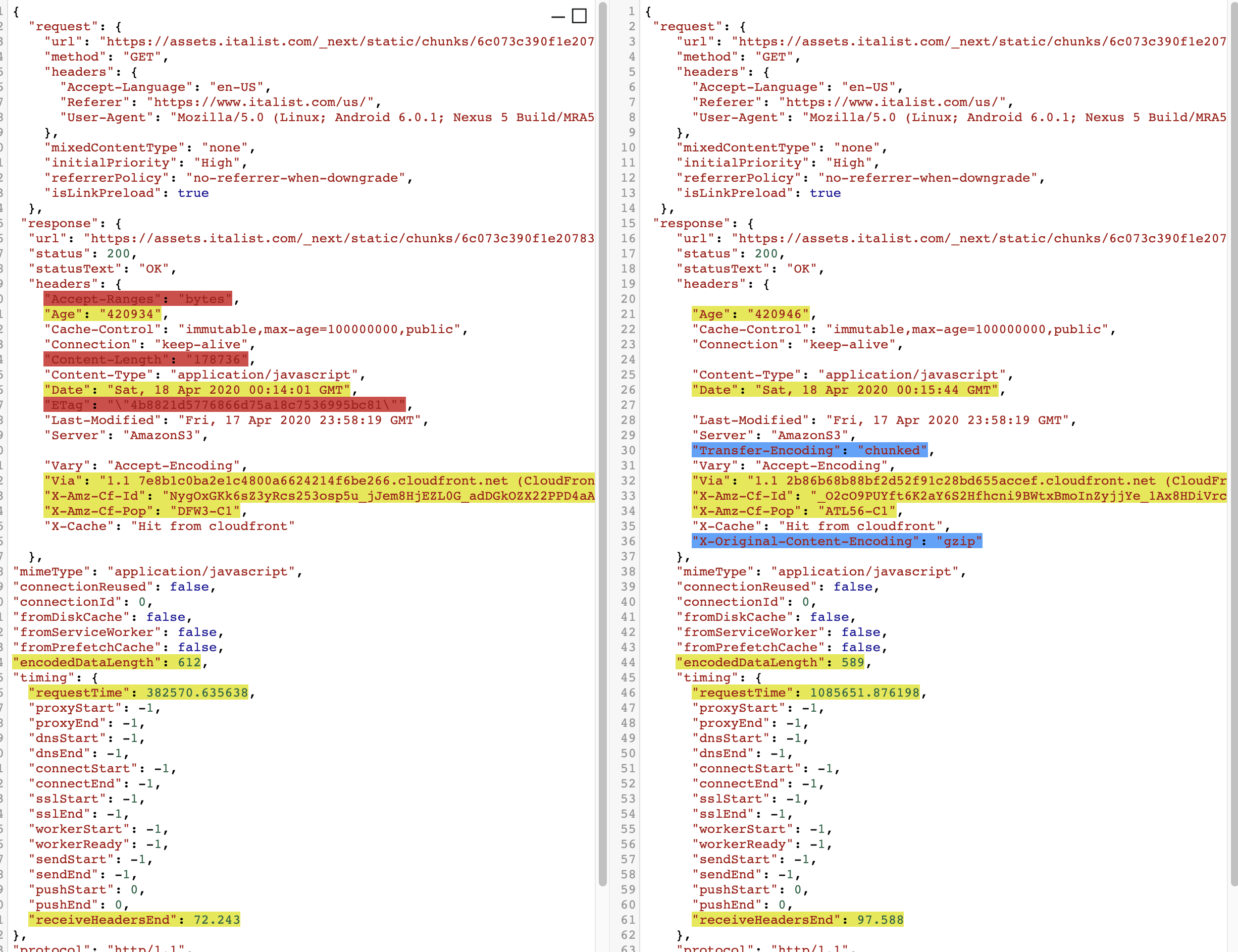

@manzoid I took a quick look, and I am seeing different results depending on which servers make the network request. Here's a diff. On the left is a request made from the central/mid US; on the right is a request made from the southeastern coast of the US. The requests look identical to me, but you can see the differences in the response headers. The "chunked" encoding is the weirdest thing. That doesn't make much sense for JS, so perhaps whatever config is behind it is causing these problems?
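Comparing responses from two regions is easier with a header diff than by eye. Below is a minimal sketch; the helper name `diff_headers` and the list of volatile headers it ignores (values that change on every request or per edge location) are assumptions chosen for this example.

```python
from typing import Dict, Iterable, Mapping, Optional, Tuple

# Headers that naturally differ between requests or edge locations; ignored by default.
VOLATILE = ("date", "age", "via", "x-amz-cf-id", "x-amz-cf-pop")


def diff_headers(
    left: Mapping[str, str],
    right: Mapping[str, str],
    ignore: Iterable[str] = VOLATILE,
) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return {header: (left_value, right_value)} for every header that differs.

    Names are compared case-insensitively; a header missing on one side
    shows up as None on that side.
    """
    lhs = {k.lower(): v for k, v in left.items()}
    rhs = {k.lower(): v for k, v in right.items()}
    skip = {name.lower() for name in ignore}
    diff: Dict[str, Tuple[Optional[str], Optional[str]]] = {}
    for name in sorted(lhs.keys() | rhs.keys()):
        if name in skip:
            continue
        if lhs.get(name) != rhs.get(name):
            diff[name] = (lhs.get(name), rhs.get(name))
    return diff
```

If one side carried the `content-encoding: gzip` headers from the curl output above and the other a response with `transfer-encoding: chunked` but no `content-encoding` (a hypothetical shape, since the screenshot isn't reproduced here), only those two header names would be reported.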

This may be a problem on the PSI side... it requires some investigation.

@paulirish thank you, please let me know if there's any investigation our team can contribute. As this appears to be regional, we can check anything you recommend from various spots around the world, and if it helps, I can open a ticket with AWS/CloudFront about this as well. I'm just not currently sure what to ask them to do, as Chrome itself in actual use doesn't seem to show the problem in any region.

Thanks @manzoid for contributing more insight into this issue. As @manzoid said, please let us know if there is anything we can contribute to get this fixed sooner. @paulirish

Hi @paulirish @exterkamp, this particular problem seems to have mostly gone away. Is this expected? Did something change? Thank you.

Do you happen to know when it went away? We updated PSI in the last few days.

Hi @connorjclark, only anecdotally, as I wasn't re-checking every single day, but it seems to have been right around when v6 was released, roughly 10 days to 2 weeks ago.

We ran into this problem today. When running Lighthouse we got the recommendation to compress our files, but when checking the files in the console we saw that they were compressed with gzip and had all the correct headers. When checking our CloudFront distribution, we confirmed that "Compress objects automatically" is set to "Yes", so we were confused as to why Lighthouse would tell us that the files were uncompressed. Because of the information in this issue about inconsistency between locations, I suspected that it had to do with the CloudFront Price Class configuration. We switched our Price Class from "Use only North America and Europe" to "Use all edge locations (best performance)" and re-ran Lighthouse, and the issue was resolved. Does Lighthouse run tests from multiple locations or just from my local network?

If you run LH in DevTools or Node, it runs locally. Otherwise, for PSI/web.dev, it runs on a Google server selected based on proximity to where you are. My suspicion is that the CF edge servers/proxying modify responses as they make their way to the requester, but at some point stop setting the "X-Original-Content-Encoding: gzip" header, as seen in #10610 (comment)
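To make the suspicion above concrete, here is a simplified, header-based compression check. It assumes, as the comment implies, that a response counts as compressed if either `Content-Encoding` or `X-Original-Content-Encoding` names a compression scheme. The function name `looks_compressed` and the exact header and coding lists are illustrative assumptions, not Lighthouse's actual source.

```python
from typing import Mapping

# Assumed header names and codings for this sketch; not taken from Lighthouse's code.
COMPRESSION_HEADERS = ("content-encoding", "x-original-content-encoding")
COMPRESSED_CODINGS = ("gzip", "br", "deflate")


def looks_compressed(headers: Mapping[str, str]) -> bool:
    """Return True if any compression header lists a known compression coding."""
    lowered = {k.lower(): v.lower() for k, v in headers.items()}
    for name in COMPRESSION_HEADERS:
        # Content-Encoding may list several codings separated by commas.
        codings = [c.strip() for c in lowered.get(name, "").split(",")]
        if any(c in COMPRESSED_CODINGS for c in codings):
            return True
    return False
```

Under this assumption, a response that an intermediary re-delivers with `Transfer-Encoding: chunked` and neither header would be flagged as uncompressed, even though the origin served gzip.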

The response headers shown in the comment I linked to above are exactly what we are getting from CF, so there isn't anything to be done on our side; I think we'll have to contact CF for a fix. Do you happen to have a good contact within their company (a sales/eng rep)? I'm making inquiries internally now, but I'm not sure I'll get a contact. EDIT: Actually, I had forgotten that

@danroestorf do you have a URL, and is it still in a fixed or broken state with respect to LH gzip reporting?

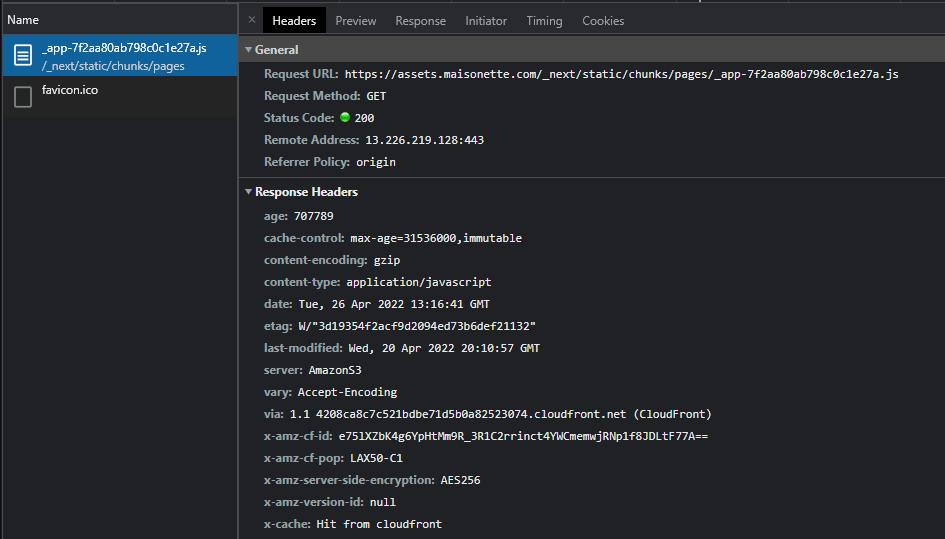

@connorjclark We see inconsistent results between Lighthouse runs for this page: https://pagespeed.web.dev/report?url=https%3A%2F%2Fwww.maisonette.com%2Fproduct%2Fmusical-lili-llama

On my current run I get these recommendations:

But when I open the first file in that list and check its headers, I see:

I've started to suspect that the inconsistency isn't with Lighthouse but with CloudFront's automatic compression of files, because we were able to find files that were not gzipped when checking DevTools. One thing that sticks out is that the recommendation says to enable compression on our next server, but these assets are being served from an S3 origin.

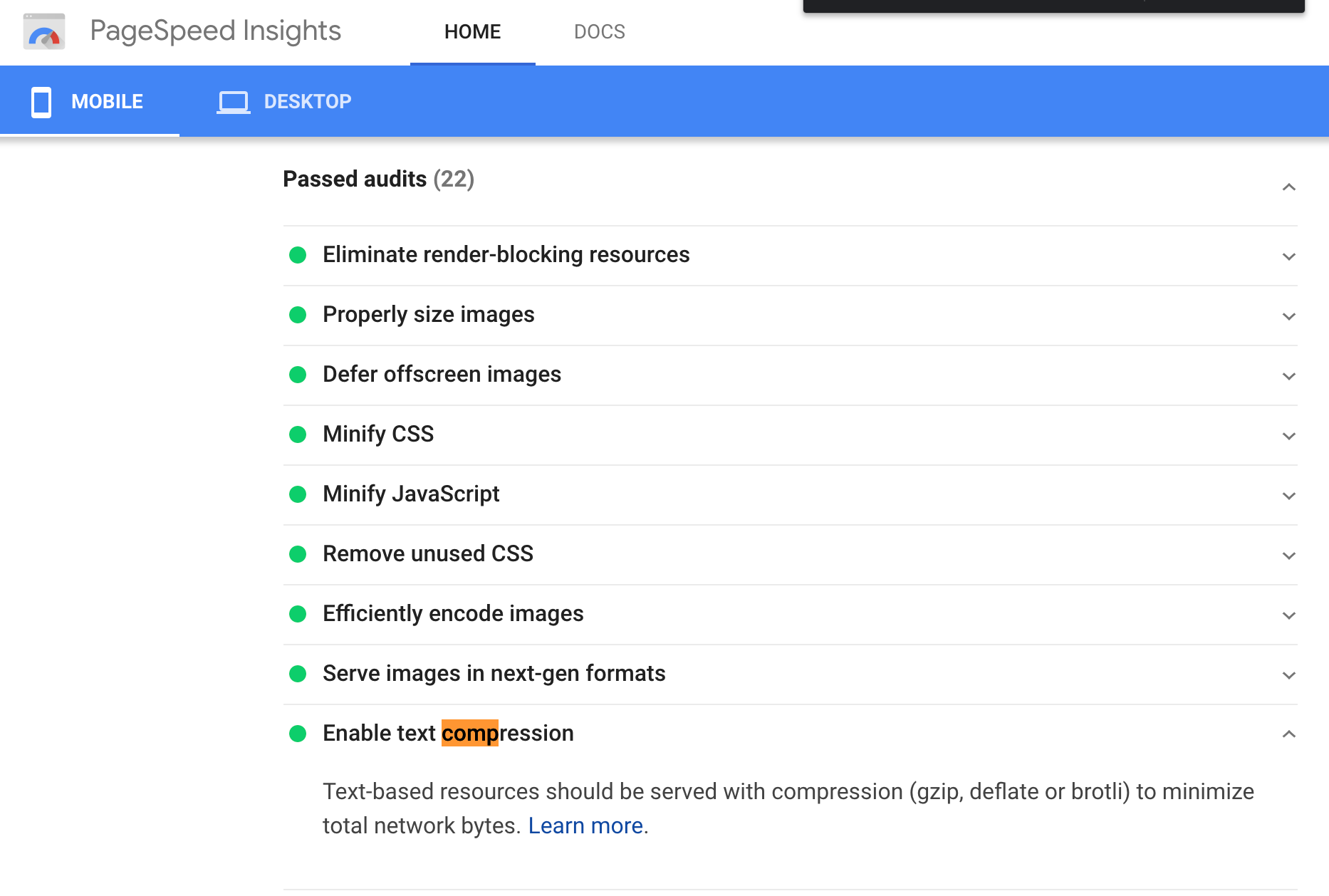

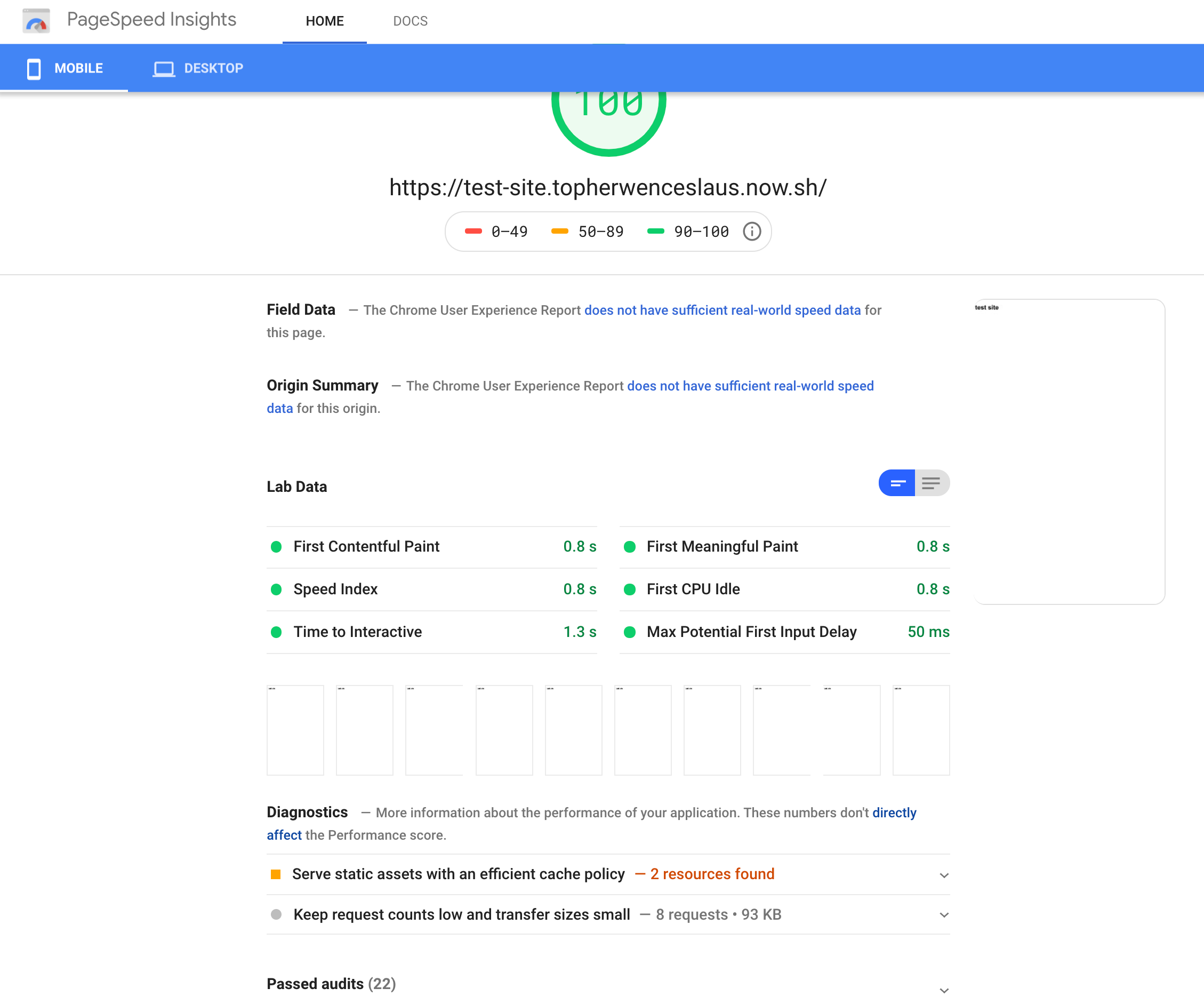

We are in the process of benchmarking our SDK scripts across sites. LH shows that our scripts are gzip-enabled and the text compression audit passes, but PageSpeed Insights reports an opportunity to compress files.

Provide the steps to reproduce

What is the current behavior?

LH and PageSpeed Insights disagree on the text compression audit.

What is the expected behavior?

Both should show the same audits.

Environment Information

Related issues
